
What is MCP in AI? Everything you wanted to ask

What is MCP?

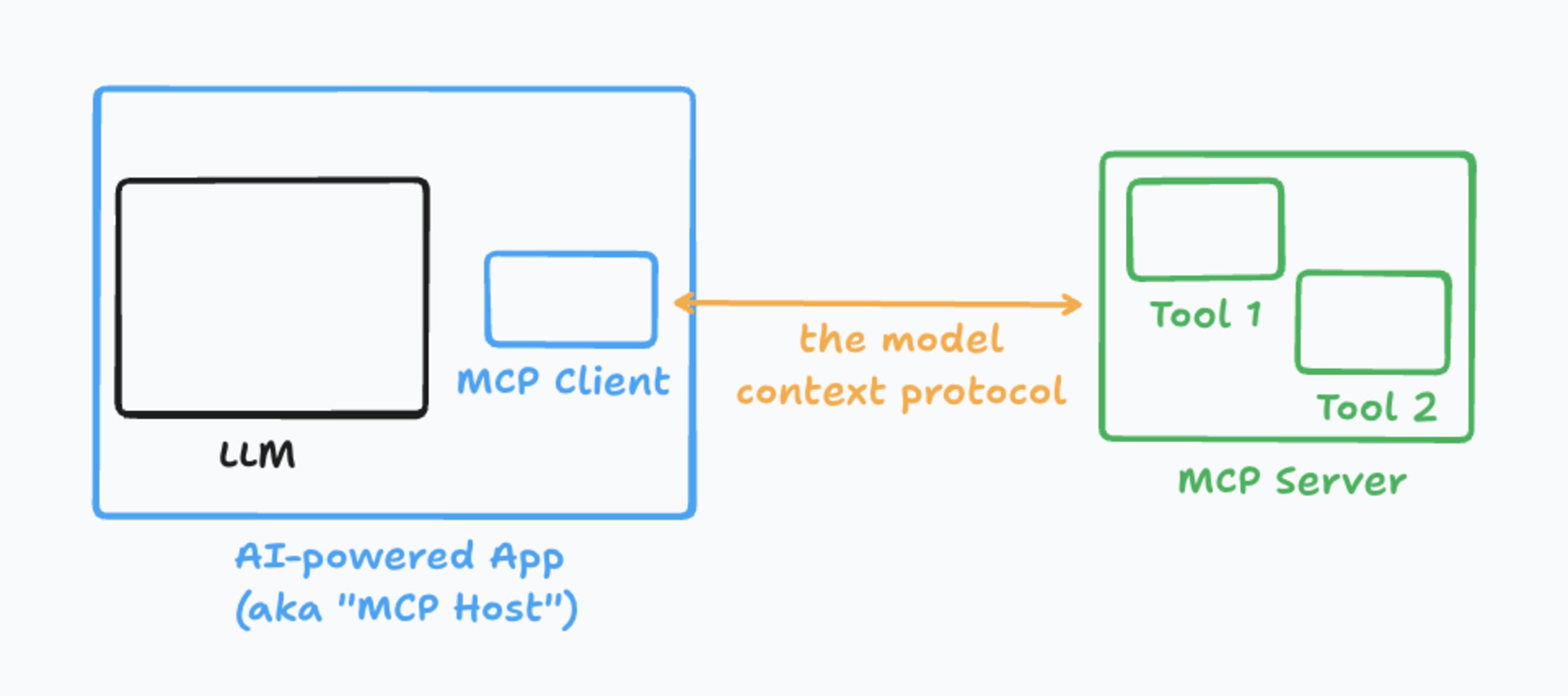

MCP stands for Model Context Protocol. It is Anthropic’s specification for how LLMs (large language models) communicate with external systems, share data, and leverage resources beyond the model’s own training data.

MCP was announced in November 2024 and quickly made a significant impact on the industry. It rallied developers to build capabilities that can be exposed to models, extending them beyond plain text-in, text-out. Models can now take actions and draw on data sources they were not trained on, which increases their autonomy in performing tasks.

The MCP specification formally calls the AI-powered application an MCP Host. Because “host” is easily confused with “server”, this article mostly uses “AI-powered application” instead.

Who creates the MCP client?

Usually, AI-powered applications allow users to add MCP servers with which they can interact. As such, the MCP client is often implemented by the AI-powered application, and the third-party capability is provided by developers who build MCP servers.

Some examples of AI-powered applications, also known as MCP Hosts, are:

Claude Desktop

Cursor

Qodo AI

These AI applications implement an MCP client to which you can add different MCP servers for various functionalities, such as managing your Git workflows and performing web searches.

Where can I find MCP servers?

Anthropic maintains a repository to track community-contributed MCP servers. In the @modelcontextprotocol/servers repository, you can find reference implementations of MCP servers and third-party contributions made by individuals.

Security disclaimer:

You should treat unofficial and unaudited MCP server code with great caution. Such code may contain malware, introduce supply chain security risks, or follow insecure coding practices, any of which can impact your infrastructure and AI applications.

You can also build your own MCP server. Anthropic provides MCP SDKs, such as the popular JavaScript SDK @modelcontextprotocol/sdk, that let developers build their own MCP servers and expose tools for LLM use.

The following is a simplified MCP server implemented in JavaScript:

    import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
    import { StdioServerTransport } from "@modelcontextprotocol/sdk/server/stdio.js";
    import { z } from "zod";

    // Create an MCP server
    const server = new McpServer({
      name: "Demo",
      version: "1.0.0"
    });

    // Add an addition tool that takes two numbers and returns their sum as text
    server.tool("add",
      { a: z.number(), b: z.number() },
      async ({ a, b }) => ({
        content: [{ type: "text", text: String(a + b) }]
      })
    );

    // Serve the tool over standard I/O using the transport imported above
    const transport = new StdioServerTransport();
    await server.connect(transport);

What are some security considerations for MCP adoption?

The following is not an exhaustive list of security risks when adopting MCP servers to empower your AI-powered applications. Still, it provides an overview and practical examples of security concerns and security vulnerabilities that can manifest in MCP servers:

Access Control - If you expose an MCP resource in an MCP server, how do you differentiate between users and their roles, so that a user cannot steer the LLM through the AI-powered application into reaching and gathering data they are not entitled to see?

Secure Coding - MCP servers are software like any other and can inadvertently be written insecurely. As with non-MCP applications, developers need to follow secure coding conventions such as defending against path traversal and code injection. For example, an MCP server may expose a tool to read files; if access should be constrained to a single parent directory, how do you ensure your code isn’t vulnerable to the classic ../ path traversal attack?
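The path traversal question can be made concrete with a small guard. The following is a minimal sketch rather than anything provided by an MCP SDK: the helper name `resolveInsideBase` and the `/srv/data` directory are hypothetical, and the check assumes symbolic links are not a concern because it does not resolve them.

```javascript
import path from "node:path";

// Hypothetical helper (not part of any MCP SDK): resolve a requested path
// against baseDir and reject anything that would land outside of it.
function resolveInsideBase(baseDir, requestedPath) {
  const base = path.resolve(baseDir);
  const target = path.resolve(base, requestedPath);
  const relative = path.relative(base, target);

  // A relative path of ".." or one starting with "../" escapes the base.
  // An absolute result can occur on Windows when the target is on another drive.
  const escapes =
    relative === ".." ||
    relative.startsWith(".." + path.sep) ||
    path.isAbsolute(relative);

  if (escapes) {
    throw new Error(`Path escapes the allowed directory: ${requestedPath}`);
  }
  return target;
}

// Example usage with an assumed base directory.
console.log(resolveInsideBase("/srv/data", "notes/todo.txt"));
try {
  resolveInsideBase("/srv/data", "../../etc/passwd");
} catch (err) {
  console.log(err.message);
}
```

A file-reading tool would call a guard like this before touching the file system, so that a traversal attempt fails before any file is opened.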

How do the MCP server and client communicate?

The data exchange format is based on JSON-RPC 2.0 with a detailed MCP specification that standardizes an optimal communication protocol for LLMs and AI-based workflows.
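To illustrate the message format, here is a minimal sketch of a JSON-RPC 2.0 request and its matching response for calling a tool named `add`. The helper functions `makeRequest` and `makeResult` are hypothetical and exist only for this illustration; the `tools/call` method name and the `content` result shape follow the MCP specification.

```javascript
// Build a JSON-RPC 2.0 request. The id ties a response back to its request.
function makeRequest(id, method, params) {
  return { jsonrpc: "2.0", id, method, params };
}

// Build the matching JSON-RPC 2.0 success response.
function makeResult(id, result) {
  return { jsonrpc: "2.0", id, result };
}

// A client asks the server to run the "add" tool with two arguments...
const request = makeRequest(1, "tools/call", {
  name: "add",
  arguments: { a: 2, b: 3 },
});

// ...and the server answers with the tool output as text content.
const response = makeResult(request.id, {
  content: [{ type: "text", text: String(2 + 3) }],
});

console.log(JSON.stringify(request));
console.log(JSON.stringify(response));
```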

The JSON-RPC communication protocol can be established over one of two types of communication channels between an MCP server and an MCP client:

A system process’s standard I/O - With the goal and benefit of enabling locally runnable MCP servers (helpful for security and privacy, among other advantages such as latency), this communication channel spins up a system process (such as npx <mcp-server>). While the process is running, protocol messages are exchanged over the process’s stdin and stdout streams, and stderr is left available for logging.

HTTP networking with Server-Sent Events (SSE) - Primarily designed for communicating with remotely deployed MCP servers that can augment an AI-powered application.
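For the standard I/O channel, the MCP specification frames each JSON-RPC message as a single line of JSON terminated by a newline. The sketch below shows how a reader might reassemble messages from arbitrary chunks of process output. The `createLineDecoder` helper is hypothetical and is not how any particular SDK implements its transport.

```javascript
// Hypothetical decoder: buffers incoming text and emits one parsed
// JSON-RPC message for every complete newline-terminated line.
function createLineDecoder(onMessage) {
  let buffer = "";
  return function push(chunk) {
    buffer += chunk;
    let newlineIndex;
    while ((newlineIndex = buffer.indexOf("\n")) !== -1) {
      const line = buffer.slice(0, newlineIndex).trim();
      buffer = buffer.slice(newlineIndex + 1);
      if (line !== "") {
        onMessage(JSON.parse(line));
      }
    }
  };
}

// Example: a message split across two chunks is delivered once it is complete.
const seen = [];
const decode = createLineDecoder((message) => seen.push(message));
decode('{"jsonrpc":"2.0","id":1,"me');
decode('thod":"ping"}\n');
console.log(seen.length, seen[0].method);
```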

Can the MCP server provide data?

Yes. More than just abstract tools, which are general sets of capabilities, MCP servers can also specifically define data sources and, even more so, suggest a set of prompts that LLMs can leverage as a prompt engineering technique to drive action and data gathering.

MCP servers can provide the following set of capabilities that expand what an LLM can do:

MCP Resources - Fundamentally, resources are different data sources that may be made available to LLMs. For example, an MCP server can expose the contents of a file or the listing of files in a directory as a data resource for an LLM. In a way, you can think of MCP resources as a sort of RAG that further enhances and extends the LLM’s data sources.

MCP Prompts - Users of AI-powered applications may not be aware of the entire set of capabilities available to them; as such, MCP servers can expose a list of text prompts that MCP clients can communicate back to end users based on semantic meaning, allowing users to use these prompts directly to drive specific actions or gather data.

MCP Tools - Arguably the most popular use case for MCP servers, tools allow LLMs to perform actions. Before MCP, LLMs could generate text as a response but couldn’t, for example, search the web, because they had no means of performing actions. This changed with the introduction of MCP (more accurately, with function calling, OpenAI’s precursor to MCP). An MCP server can now implement an entire action, such as operating a robot’s hand or typing into a search box in the browser, and expose that capability to the LLM, which can then trigger it.
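The three capability types can be pictured as lists that a server advertises on request. The sketch below is illustrative only: the `capabilities` object, the example entries, and the `handleListRequest` dispatcher are assumptions made for this article, while the `tools/list`, `resources/list`, and `prompts/list` method names and the descriptor fields follow the MCP specification.

```javascript
// Hypothetical in-memory catalogue of what a server exposes.
const capabilities = {
  tools: [
    {
      name: "add",
      description: "Add two numbers",
      // Tool inputs are described with JSON Schema.
      inputSchema: {
        type: "object",
        properties: { a: { type: "number" }, b: { type: "number" } },
        required: ["a", "b"],
      },
    },
  ],
  resources: [
    { uri: "file:///project/README.md", name: "Project README", mimeType: "text/markdown" },
  ],
  prompts: [
    {
      name: "summarize-file",
      description: "Summarize the contents of a file",
      arguments: [{ name: "path", description: "File to summarize", required: true }],
    },
  ],
};

// Answer a discovery request by returning the matching list.
function handleListRequest(method) {
  switch (method) {
    case "tools/list":
      return { tools: capabilities.tools };
    case "resources/list":
      return { resources: capabilities.resources };
    case "prompts/list":
      return { prompts: capabilities.prompts };
    default:
      throw new Error(`Unsupported method: ${method}`);
  }
}

console.log(handleListRequest("tools/list").tools.map((tool) => tool.name));
```

Because clients can ask for these lists at runtime, an AI-powered application can learn what a server offers without prior knowledge of it.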

How can I debug the MCP server and client communication?

Developers who build an MCP server on the HTTP transport can still rely on console log output, as JavaScript developers are used to. This won’t work for MCP servers that use the STDIO (standard input/output) transport, for two reasons: the standard I/O channels are reserved for the protocol itself, and the MCP server runs as a sub-process of the MCP host rather than as a command-line tool that you’d normally interact with in a shell (technically, a TTY).

Anthropic’s MCP documentation primarily recommends the following methodologies and tools for debugging an MCP server:

The MCP Inspector - The MCP Inspector is a dedicated developer tool, launched from the command line, that connects to an MCP server and lets you inspect, debug, and track the messages exchanged with it. For example, the JavaScript MCP Inspector is available through the npm registry and can be run as follows:

    npx @modelcontextprotocol/inspector <command> <arg1> <arg2>

Note: a PyPI package also exists for developers building MCP servers in Python.

Logging facility - A helpful practice is to use a dedicated logging facility, such as a local log file or a network-based remote logging service, to collect information from either an HTTP-based or an STDIO-based MCP server. Notably, file logs are especially useful for MCP servers communicating over STDIO, where console output is not available.
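One simple way to keep diagnostics away from the protocol channel in an STDIO-based server is to write them to stderr, which the MCP specification allows servers to use for logging. The sketch below assumes a Node.js process; `formatLogLine` and `logToStderr` are hypothetical names, and the same formatter could just as well feed a log file or a remote logging service.

```javascript
// Format a log entry with an ISO timestamp and a severity level.
function formatLogLine(level, message, now = new Date()) {
  return `${now.toISOString()} [${level}] ${message}`;
}

// Write to stderr so that stdout stays reserved for protocol messages.
function logToStderr(level, message) {
  process.stderr.write(formatLogLine(level, message) + "\n");
}

logToStderr("info", "MCP server started");
```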

How is MCP different from OpenAI’s Tools?

Tools, also known as function calling in other APIs and frameworks, enable agentic workflows by exposing capabilities that the model can invoke. Tools are the implementation detail, whereas the Model Context Protocol is a higher-level specification that sets up contracts and standards for defining such tools and functions.
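The relationship can be seen by mapping one format onto the other. The following sketch converts an MCP tool descriptor into the tool definition shape used by OpenAI’s Chat Completions function calling. The `toOpenAiTool` function is a hypothetical adapter written for this article, not part of either SDK.

```javascript
// Hypothetical adapter: both formats describe inputs with JSON Schema,
// so the conversion is mostly a matter of renaming fields.
function toOpenAiTool(mcpTool) {
  return {
    type: "function",
    function: {
      name: mcpTool.name,
      description: mcpTool.description,
      parameters: mcpTool.inputSchema,
    },
  };
}

// An MCP tool descriptor, as a server would advertise it.
const addTool = {
  name: "add",
  description: "Add two numbers",
  inputSchema: {
    type: "object",
    properties: { a: { type: "number" }, b: { type: "number" } },
    required: ["a", "b"],
  },
};

console.log(JSON.stringify(toOpenAiTool(addTool), null, 2));
```

The descriptor itself carries over almost unchanged. What MCP adds is the standard way for a client to discover and call such tools across servers.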

Is MCP like GraphQL?

When MCP was announced and gained popularity, developers debated whether it was essentially reinventing GraphQL, a query language for APIs that is commonly used as an alternative to RESTful APIs. While similarities exist, MCP is not intended to replace, replicate, or draw from GraphQL.

MCP is purpose-built to leverage and enhance LLMs’ autonomous behavior and the data available to them. Before MCP, OpenAI-style function calling (or tool calling) in agentic AI workflows left developers to build such capabilities without a standard interface, and discoverability depended on the model and framework they chose. MCP addresses this by providing a standardized communication protocol that exposes actions and data through discoverable features.

Interested in learning more about how to build your applications with AI? Explore 10 best practices for developing securely with AI.
