10 Docker Security Best Practices

January 8, 2025

Key aspects of Docker security

Docker security refers to the build, runtime, and orchestration aspects of Docker containers. It includes the Dockerfile security aspects of Docker base images, as well as the Docker container security runtime aspects—such as user privileges, Docker daemon, proper CPU controls for a container, and further concerns around the orchestration of Docker containers at scale.

The state of Docker container security unfolds into 4 main Docker security issues:

Dockerfile security and best practices

Docker container security at runtime

Supply chain security risks with Docker Hub and how they impact Docker container images

Cloud native container orchestration security aspects related to Kubernetes and Helm

Container security for DevSecOps

Find and fix container vulnerabilities for free with Snyk.

10 Docker security best practices

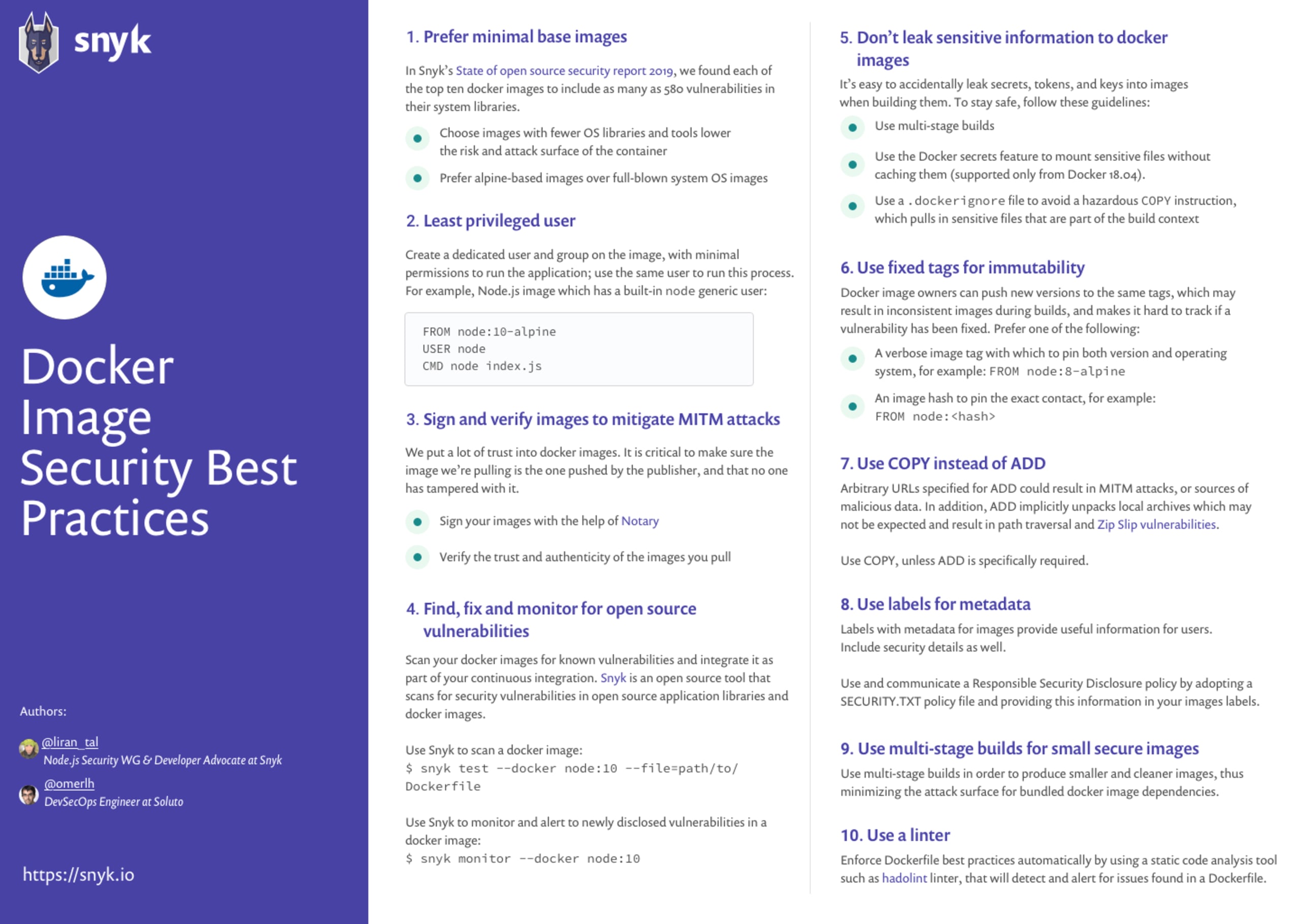

1. Prefer minimal base images

A common Docker container security issue is ending up with large images for your containers. In general, the more software your container has, the bigger the potential attack surface, so the principle should always be to include only the absolute minimum that your application requires to run. The path of least resistance is often to use a generic Docker container image to get things running quickly. This might be an operating system image like Debian or an official runtime image such as Node. If your project doesn’t require general system libraries or system utilities, then it is better to avoid using a full-blown operating system (OS) as a base image.

Generic runtime images, by their nature, contain everything needed to run applications under most circumstances and likely contain huge amounts of software that your application does not use. The best practice is to take advantage of multi-stage builds, perhaps using a generic image during the build that contains the build tools and then transferring the built application into a slim image that contains a minimal environment suitable for production use.

You can also consider using specialized container distributions such as the distroless images from Google, or Alpine Linux, which is much smaller by default. For compiled languages such as Go or C, you can even create completely empty containers by specifying scratch in the Dockerfile, since these kinds of binaries may have no external dependencies at all.
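As a minimal sketch of that approach for a Node.js project, the Dockerfile below installs dependencies in the full `node:20` image, which ships the toolchain that native add-ons may need, and then copies the result into the smaller `node:20-slim` image. The image tags, the `/app` path, and the `index.js` entry point are assumptions for illustration, not requirements.

```dockerfile
# Build stage: full image that includes compilers and other build tooling
FROM node:20 AS build
WORKDIR /app
# Copy the manifests first so the dependency layer is cached between builds
COPY package*.json ./
# Install production dependencies only (assumes a package-lock.json exists)
RUN npm ci --omit=dev
COPY . .

# Production stage: slim image without the build tooling
FROM node:20-slim
WORKDIR /app
# Bring over only the application and its installed dependencies
COPY --from=build --chown=node:node /app /app
USER node
CMD ["node", "index.js"]
```

Only the second stage ends up in the image you ship; the build stage and everything installed in it are left behind.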

2. Least privileged user

When a Dockerfile doesn’t specify a USER, Docker defaults to running the container as the root user. In practice, there are very few reasons why a container should have root privileges, and running as root can easily become a Docker security issue. Unless user namespace remapping is configured, the root user inside the container maps to the root user on the Docker host, which means the container potentially has root access on the host. Running the application as root also gives an attacker an easier path to privilege escalation if the application itself is vulnerable to exploitation.

To minimize exposure, create a dedicated user and a dedicated group in the Docker image for the application; use the USER directive in the Dockerfile to ensure the container runs the application with the least privileged access possible.

Remember that any new users or groups might not exist in the image; make sure you create them using the instructions in the Dockerfile.

The following demonstrates a complete example of how to do this for a generic Ubuntu image:

    FROM ubuntu
    RUN mkdir /app
    RUN groupadd -r lirantal && useradd -r -s /bin/false -g lirantal lirantal
    WORKDIR /app
    COPY . /app
    RUN chown -R lirantal:lirantal /app
    USER lirantal
    CMD node index.js

The example above:

creates a system user (-r) with no password, no home directory set, and no shell

adds the user we created to an existing group that we created beforehand (using groupadd)

adds a final argument set to the user name we want to create in association with the group we created

If you’re a fan of Node.js and alpine images, they already bundle a generic user for you called node. Here’s a Node.js example, making use of the generic node user:

    FROM node:10-alpine
    # WORKDIR creates /app and makes it the directory that CMD runs in
    WORKDIR /app
    COPY . /app
    RUN chown -R node:node /app
    USER node
    CMD ["node", "index.js"]

If you’re developing Node.js applications, you may want to consult the official Docker and Node.js Best Practices.


3. Sign and verify images to mitigate MITM attacks

The authenticity of Docker images is a challenge. We put a lot of trust into these images, as we are literally using them as the container that runs our code in production. Therefore, it is critical to make sure the image we pull is the one that was pushed by the publisher and that no party has modified it. Tampering may occur over the wire, between the Docker client and the registry, or by compromising the owner’s registry account to push a malicious image.

The signing of images and other related artifacts is a key topic in securing your software supply chain, and the technologies in this space are rapidly maturing. Currently, the two most commonly used tools for doing this are:

Docker Notary v1, which is the basis for Docker Content Trust (DCT) functionality built into the Docker CLI

The Sigstore project, including its cosign tool, which implements simple signing, storage, and verification of artifacts

Docker Content Trust

Docker Content Trust (DCT) has been around for several years and allows image authors to sign the tags they push to supported image registry servers that implement the Docker Notary API, like Docker Hub. When enabled on your Docker host, DCT will block any image from being pulled, pushed, or run if its signature cannot be verified.

Signing your container image with DCT is simple: just set the environment variable `DOCKER_CONTENT_TRUST=1`, and when you push your image to Docker Hub or any other Notary-enabled image registry, Docker will take care of applying the signature and storing it with the image tag:

    ❯ export DOCKER_CONTENT_TRUST=1
    ❯ docker push myaccount/myimage:1
    The push refers to repository [docker.io/myaccount/myimage]
    64ab2aa20cf9: Layer already exists
    b99937123ca0: Layer already exists
    …
    1: digest: sha256:c221d4dc80b8a0d3866602020b09722d942157c720273d325a0496a529b5fcab size: 4093
    Signing and pushing trust metadata
    You are about to create a new root signing key passphrase. This passphrase
    will be used to protect the most sensitive key in your signing system. Please
    choose a long, complex passphrase and be careful to keep the password and the
    key file itself secure and backed up. It is highly recommended that you use a
    password manager to generate the passphrase and keep it safe. There will be no
    way to recover this key. You can find the key in your config directory.
    Enter passphrase for new root key with ID 85871b5:
    Repeat passphrase for new root key with ID 85871b5:
    Enter passphrase for new repository key with ID e675351:
    Repeat passphrase for new repository key with ID e675351:
    Finished initializing "docker.io/myaccount/myimage"
    Successfully signed docker.io/myaccount/myimage:1

At container startup time, signature enforcement can be implemented simply by setting the same environment variable, and the Docker CLI will refuse to pull or start a container image tag that is unsigned or has an invalid signature.

    ❯ docker run --rm -it myaccount/someapp
    docker: Error: remote trust data does not exist for docker.io/myaccount/someapp: notary.docker.io does not have trust data for docker.io/myaccount/someapp.
    See 'docker run --help'.

More complicated topics like key revocations are handled with the notary binary tool. For more on Notary v1 and DCT, check the official documentation.

Sigstore

The Sigstore project tools aim to address not only container image signatures but also any artifacts you might want to sign, such as a software bill of materials (SBOM), attestations about an artifact (for example, build metadata), or even a non-image binary file or “blob.”

In its simplest use case, signing an image is a matter of running the `cosign sign` command and passing the registry and image:tag you want to sign. A corresponding `cosign verify` command will validate the signature.

    # Generate a key pair (do this once)
    ❯ cosign generate-key-pair
    Enter password for private key:
    Enter password for private key again:
    Private key written to cosign.key
    Public key written to cosign.pub

    # Sign the already-pushed image
    ❯ cosign sign --key cosign.key myaccount/myimage:1
    Enter password for private key:
    tlog entry created with index: 1234567
    Pushing signature to: index.docker.io/myaccount/myimage

As of v1.9, cosign requires you to generate and manage your key pairs yourself, which the `generate-key-pair` command (shown above) makes easy. Cosign also supports a “keyless” signing mode, which uses an OpenID Connect (OIDC) authorization flow to automatically generate ephemeral key pairs annotated with your email address. In v1.9 this mode is experimental and is enabled by setting `COSIGN_EXPERIMENTAL=1` before running your `sign` or `verify` commands; from cosign 2.0 onward, keyless signing is the default and no longer needs that variable.

    ❯ export COSIGN_EXPERIMENTAL=1
    ❯ cosign sign myaccount/myimage:1
    Generating ephemeral keys...
    Retrieving signed certificate...
    Note that there may be personally identifiable information associated with this signed artifact.
    This may include the email address associated with the account with which you authenticate.
    This information will be used for signing this artifact and will be stored in public transparency logs and cannot be removed later.
    By typing 'y', you attest that you grant (or have permission to grant) and agree to have this information stored permanently in transparency logs.
    Are you sure you want to continue? (y/[N]): y
    Your browser will now be opened to:
    https://oauth2.sigstore.dev/auth/auth?access_type=online&client_id=sigstore&code_challenge=********************
    Successfully verified SCT...
    tlog entry created with index: 1234567
    Pushing signature to: index.docker.io/myaccount/myimage

Another thing to consider with image signatures is how you will be running the containers. For most of us, Kubernetes is our platform of choice, and it does not have native support for DCT, so unless you are using a specific distribution that implements it, you are going to need to provide some form of runtime enforcement. Fortunately, the Kubernetes admission controller API can be leveraged to do this, and open source projects like Connaisseur can take care of this for DCT / Notary v1 as well as Cosign signatures.

4. Find, fix and monitor for open source vulnerabilities

When we choose a base image for our Docker container, we indirectly take upon ourselves the risk of all the container security concerns that the base image is bundled with. This can be poorly configured defaults that don’t contribute to the security of the operating system, as well as system libraries that are bundled with the base image we chose.

A good first step is to make use of as minimal a base image as is possible while still being able to run your application without issues. This helps reduce the attack surface by limiting exposure to vulnerabilities; on the other hand, it doesn’t run any audits on its own, nor does it protect you from future vulnerabilities that may be disclosed for the version of the base image that you are using.

Therefore, one way of protecting against vulnerabilities in open source software is to use tools such as Snyk to add continuous Docker security scanning and monitoring of vulnerabilities that may exist across all of the Docker image layers in use.

Scan a Docker image for known vulnerabilities with these commands:

    # fetch the image to be tested so it exists locally
    $ docker pull node:10
    # scan the image with snyk
    $ snyk container test node:10 --file=path/to/Dockerfile

Monitor a Docker image for known vulnerabilities so that when new vulnerabilities are discovered in the image, Snyk can notify you and provide remediation advice:

    $ snyk container monitor node:10

Based on scans performed by Snyk users, we found that 44% of Docker image scans had known vulnerabilities for which newer and more secure base images were available. This remediation advice, which developers can act on to upgrade their Docker images, is unique to Snyk.

Snyk also found that in 20% of all Docker image scans, simply rebuilding the Docker image would be enough to reduce the number of vulnerabilities.

How to audit container security?

Scanning your Linux-based container project for known vulnerabilities is essential to ensuring the security of your environment. To achieve this, Snyk scans the base image, the operating system (OS) packages installed and managed by the package manager, and key binaries in layers that were not installed through the package manager.

Snyk’s container vulnerability management tool offers remediation advice and guidance for public Docker Hub images by indicating a base image recommendation, the Dockerfile layer in which a vulnerability was found, and more.

5. Don’t leak sensitive information to Docker images

Sometimes, when building an application inside a Docker image, you need secrets such as an SSH private key to pull code from a private repository, or you need tokens to install private packages. If you copy them into the Docker intermediate container they are cached on the layer to which they were added, even if you delete them later on. These tokens and keys must be kept outside of the Dockerfile.

Using multi-stage builds

Multi-stage builds are another way to improve Docker container security. By leveraging Docker support for multi-stage builds, you can fetch and manage secrets in an intermediate stage that is later discarded, so that no sensitive data reaches the final image. Add the secrets to that intermediate stage, as in the following example:

    FROM ubuntu AS intermediate

    WORKDIR /app
    COPY secret/key /tmp/
    RUN scp -i /tmp/key build@acme:/files .

    FROM ubuntu
    WORKDIR /app
    COPY --from=intermediate /app .

Using Docker BuildKit secrets

Use a BuildKit feature in Docker for managing secrets to mount sensitive files without caching them, similar to the following:

    # syntax=docker/dockerfile:1
    FROM alpine

    # shows secret from default secret location
    RUN --mount=type=secret,id=mysecret cat /run/secrets/mysecret

    # shows secret from custom secret location
    RUN --mount=type=secret,id=mysecret,dst=/foobar cat /foobar

Read more about build secrets in the Docker documentation.
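A more practical sketch of the same mechanism is installing private npm packages without baking the registry token into a layer. The secret id `npmrc` and the assumption that your token lives in `~/.npmrc` on the build machine are illustrative choices, not requirements.

```dockerfile
# syntax=docker/dockerfile:1
FROM node:20-slim
WORKDIR /app
COPY package*.json ./

# Mount the secret as /root/.npmrc for this RUN step only;
# the file is not written to any image layer.
# "npmrc" is a hypothetical secret id chosen for this example.
RUN --mount=type=secret,id=npmrc,target=/root/.npmrc npm ci

COPY . .
CMD ["node", "index.js"]
```

The secret is supplied at build time, for example with `docker build --secret id=npmrc,src="$HOME/.npmrc" .`, so it never needs to be part of the build context.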

Beware of recursive copy

You should also be mindful when copying files into the image that is being built. For example, the following command copies the entire build context folder, recursively, to the Docker image, which could end up copying sensitive files as well:

    COPY . .

If you have sensitive files in your folder, either remove them or use .dockerignore to ignore them:

    private.key
    appsettings.json

How do you protect a Docker container?

Ensure you use multi-stage builds so that the container image built for production is free of development assets and any secrets or tokens.

Furthermore, ensure you are using a container security tool to scan your Docker images from the CLI, directly from Docker Hub, or those deployed to production using Amazon ECR, Google GCR, or other registries.

6. Use fixed tags for immutability

Each Docker image can have multiple tags, which are variants of the same images. The most common tag is latest, which represents the latest version of the image. Image tags are not immutable, and the author of the images can publish the same tag multiple times.

This means that the base image for your Dockerfile might change between builds. This could result in inconsistent behavior because of changes made to the base image. There are multiple ways to mitigate this issue and improve your Docker security posture:

Prefer the most specific tag available. If the image has multiple tags, such as :8 and :8.0.1 or even :8.0.1-alpine, prefer the latter, as it is the most specific image reference. Avoid using the most generic tags, such as latest. Keep in mind that when pinning a specific tag, it might be deleted eventually.

To mitigate the issue of a specific image tag becoming unavailable and becoming a show-stopper for teams that rely on it, consider running a local mirror of this image in a registry or account that is under your own control. It’s important to take into account the maintenance overhead required for this approach—because it means you need to maintain a registry. Replicating the image you want to use in a registry that you own is good practice to make sure that the image you use does not change.

Be very specific! Instead of pulling a tag, pull an image using its specific SHA256 digest, which guarantees you get the same image on every pull. Note, however, that relying on a SHA256 digest carries its own risk: if the publisher replaces or removes the image, that digest might no longer exist.
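To illustrate the last option, here is a minimal sketch of pinning a base image by digest. `BASE_DIGEST` is a hypothetical build argument introduced for this example; the actual digest is deliberately not filled in here and has to come from your own registry, for instance from the output of `docker images --digests`.

```dockerfile
# BASE_DIGEST is a hypothetical build argument holding the full digest
# (in the form sha256:...). It has no default, so the build fails
# if no digest is supplied.
ARG BASE_DIGEST
# The tag is kept for readability; the digest decides which image is pulled
FROM node:20-alpine@${BASE_DIGEST}

WORKDIR /app
COPY . /app
USER node
CMD ["node", "index.js"]
```

You would then pass the digest you have verified with `docker build --build-arg BASE_DIGEST=<digest> .`, which keeps the pinned value under version control in your build scripts rather than hidden in a mutable tag.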

Are Docker images secure?

Docker images might be based on open source Linux distributions and bundle open source software and libraries. State of open source security research conducted by Snyk found that the most popular Docker images each contain at least 30 vulnerabilities.

7. Use COPY instead of ADD

Docker provides two instructions for copying files from the host to the Docker image when building it: COPY and ADD. The instructions are similar in nature but differ in functionality, and the differences can result in Docker container security issues for the image:

COPY — copies local files recursively, given explicit source and destination files or directories. With COPY, you must declare the locations.

ADD — copies local files recursively, implicitly creates the destination directory when it doesn’t exist, and accepts archives as local or remote URLs as its source, which it expands or downloads respectively into the destination directory.

While subtle, the differences between ADD and COPY are important. Be aware of these differences to avoid potential security issues:

When remote URLs are used to download data directly into a destination in the image, the download could be subject to man-in-the-middle attacks that modify the content of the file. Moreover, the origin and authenticity of remote URLs need to be further validated. COPY does not accept remote URLs at all; if you do fetch remote files during a build, use a secure TLS connection and validate their origin as well.

Space and image layer considerations: using COPY keeps adding a local archive and unpacking it as separate steps in different layers, which optimizes the image cache. If remote files are needed, combining the download, extraction, and clean-up into one RUN command produces a single layer instead of the several layers that would be required if ADD were used.

When local archives are used, ADD automatically extracts them to the destination directory. While this may be acceptable, it adds the risk of zip bombs and Zip Slip vulnerabilities that could then be triggered automatically.
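The points above can be sketched as a single RUN step that downloads over TLS, verifies a checksum, extracts, and cleans up. The URL, the `/opt/app` destination, and the `ARCHIVE_URL` and `ARCHIVE_SHA256` build arguments are all hypothetical placeholders for this example.

```dockerfile
FROM debian:bookworm-slim

# Hypothetical archive location and its expected checksum.
# ARCHIVE_SHA256 has no default, so the checksum step (and the build)
# fails unless a value is supplied with --build-arg.
ARG ARCHIVE_URL=https://example.com/releases/app.tar.gz
ARG ARCHIVE_SHA256

# Download, verify, extract, and clean up in one layer
RUN apt-get update \
 && apt-get install -y --no-install-recommends ca-certificates curl \
 && curl -fsSL "$ARCHIVE_URL" -o /tmp/app.tar.gz \
 && echo "$ARCHIVE_SHA256  /tmp/app.tar.gz" | sha256sum -c - \
 && mkdir -p /opt/app \
 && tar -xzf /tmp/app.tar.gz -C /opt/app \
 && rm -rf /tmp/app.tar.gz /var/lib/apt/lists/*
```

Compared with `ADD <url>`, this makes the integrity check explicit and leaves neither the archive nor the package index behind in the image.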

8. Use metadata labels

Image labels provide metadata for the image you’re building. This helps users understand how to use the image easily. The most common label is “maintainer”, which specifies the email address and the name of the person maintaining this image. Add metadata with the following LABEL instruction:

    LABEL maintainer="me@acme.com"

In addition to a maintainer contact, add any metadata that is important to you. This metadata could contain: a commit hash, a link to the relevant build, quality status (did all tests pass?), source code, a reference to your security.txt file location and so on.

It is good practice to adopt a security.txt file (RFC 9116) that points to your responsible disclosure policy, and to reference it from your image labels, such as the following:

    LABEL securitytxt="https://www.example.com/.well-known/security.txt"

See more information about labels for Docker images: http://label-schema.org/rc1/
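A slightly fuller sketch of build metadata labels follows. It assumes your build pipeline passes in two hypothetical build arguments, `GIT_COMMIT` and `BUILD_URL`, and it uses the `org.opencontainers.image.*` keys defined by the OCI image specification for the standard fields. The `com.example.*` key and all URLs are placeholders.

```dockerfile
FROM alpine:3.20

# Hypothetical build arguments supplied by the CI pipeline, e.g.
#   docker build --build-arg GIT_COMMIT="$(git rev-parse HEAD)" .
ARG GIT_COMMIT=unknown
ARG BUILD_URL=unknown

# Standard OCI annotation keys for common metadata
LABEL org.opencontainers.image.authors="me@acme.com" \
      org.opencontainers.image.source="https://example.com/acme/app" \
      org.opencontainers.image.revision="${GIT_COMMIT}"

# Custom keys (placeholders) for metadata that has no standard key
LABEL com.example.build-url="${BUILD_URL}" \
      securitytxt="https://www.example.com/.well-known/security.txt"
```

Labels set this way can be read back from a built image with `docker inspect`, which makes it possible to trace a running container to the commit and build that produced it.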

9. Use multi-stage build for small and secure docker images

While building your application with a Dockerfile, many artifacts are created that are required only during build-time. These can be packages such as development tooling and libraries that are required for compiling, or dependencies that are required for running unit tests, temporary files, secrets, and so on.

Keeping these artifacts in the base image, which may be used for production, results in an increased Docker image size, and this can badly affect the time spent downloading it as well as increase the attack surface because more packages are installed as a result. The same is true for the Docker image you’re using—you might need a specific Docker image for building, but not for running the code of your application.

Golang is a great example. To build a Golang application, you need the Go compiler. The compiler can produce a statically linked executable that runs without external dependencies, even in scratch images.

This is a good reason why Docker has the multi-stage build capability. This feature allows you to use multiple temporary images in the build process, keeping only the final image along with the artifacts you copied into it. In this way, you have two images:

First image—a very big image size, bundled with many dependencies that are used in order to build your app and run tests.

Second image—a very thin image in terms of size and number of libraries, with only a copy of the artifacts required to run the app in production.
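The two images described above can be sketched for a Go application as follows. The sketch assumes a Go module with its main package at the root of the build context; the `golang:1.22` tag, the `/out/app` path, and the numeric user ID are illustrative choices.

```dockerfile
# First image: full Go toolchain, used only to compile the application
FROM golang:1.22 AS build
WORKDIR /src
COPY . .
# CGO_ENABLED=0 produces a statically linked binary with no libc dependency
RUN CGO_ENABLED=0 go build -o /out/app .

# Second image: empty base that contains nothing but the binary
FROM scratch
COPY --from=build /out/app /app
# Numeric IDs work in scratch, which has no /etc/passwd to resolve names
USER 65534:65534
ENTRYPOINT ["/app"]
```

The compiler, source code, and module cache stay in the first image; the image you deploy contains a single file.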

10. Use a linter

Adopt the use of a linter to avoid common mistakes and establish best practice guidelines that engineers can follow in an automated way. This is a helpful docker security scanning task to statically analyze Dockerfile security issues.

One such linter is hadolint. It parses a Dockerfile and shows a warning for anything that does not match its best practice rules.

Hadolint is even more powerful when it is used inside an integrated development environment (IDE). For example, when using hadolint as a VSCode extension, linting errors appear while typing. This helps in writing better Dockerfiles faster.
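As a rough illustration of what such a linter reports, the sketch below annotates a deliberately flawed Dockerfile with hadolint rules it would likely trigger. The rule IDs come from hadolint's published rule list and may differ between versions, so treat them as assumptions and compare them with the output of your installed version. The application files are hypothetical.

```dockerfile
# DL3007: using the "latest" tag means the base image can change between builds
FROM node:latest

# DL3020: ADD is used for plain files and folders where COPY would do
ADD . /app

WORKDIR /app
RUN npm ci

# DL3002: the last USER in the Dockerfile should not be root
USER root
CMD ["node", "index.js"]
```

Running `hadolint Dockerfile` against a file like this prints one finding per offending line, which maps directly onto practices 2, 6, and 7 in this list.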


How do you harden a docker container image?

You may use linters such as hadolint or dockle to ensure the Dockerfile has a secure configuration. Make sure you also scan your container images to avoid vulnerabilities with a severe security impact in your production containers.

Is Docker a security risk?

Docker is a technology for software virtualization that has gained popularity and widespread adoption. When we build and deploy Docker containers, we need to do so with security best practices in mind in order to mitigate concerns such as security vulnerabilities bundled with the Docker base images or data breaches due to misconfigured Docker containers.

How do I secure a Docker container?

Follow Docker security best practices: use Docker base images with the fewest or no known vulnerabilities, apply secure Dockerfile settings, and monitor your deployed containers to ensure there is no image drift between dev and production. Furthermore, ensure you follow Infrastructure as Code best practices for container orchestration solutions.

Docker security: additional resources

To wrap up, if you want to keep up with security best practices for building optimal Docker images for Node.js and Java applications:

Are you a Java developer? You’ll find this resource valuable: Docker for Java developers: 5 things you need to know not to fail your security

10 best practices to build a Java container with Docker - A great in-depth cheat sheet on how to build production-grade containers for Java applications.

10 best practices to containerize Node.js web applications with Docker - If you’re a Node.js developer you are going to love this step by step walkthrough, showing you how to build performant and secure Docker base images for your Node.js applications.
