Malware in LLM Python Package Supply Chains

February 19, 2025

The gptplus and claudeai-eng supply chain attack represents a sophisticated malware campaign that remained active and undetected on PyPI for an extended period. The packages were uploaded in November 2023 and accumulated significant downloads before their discovery and removal. These malicious packages posed as legitimate tools for interacting with popular AI language models (ChatGPT and Claude) while secretly executing data exfiltration and system compromise operations.

Package Distribution and Timeline

Two malicious packages were identified:

gptplus (version 2.2.0)

claudeai-eng (version 1.0.0)

Both packages were uploaded to PyPI by the same author in November 2023 and accumulated over 1,700 downloads worldwide, with significant activity in the United States, China, France, Germany, and Russia before their eventual removal from the repository.

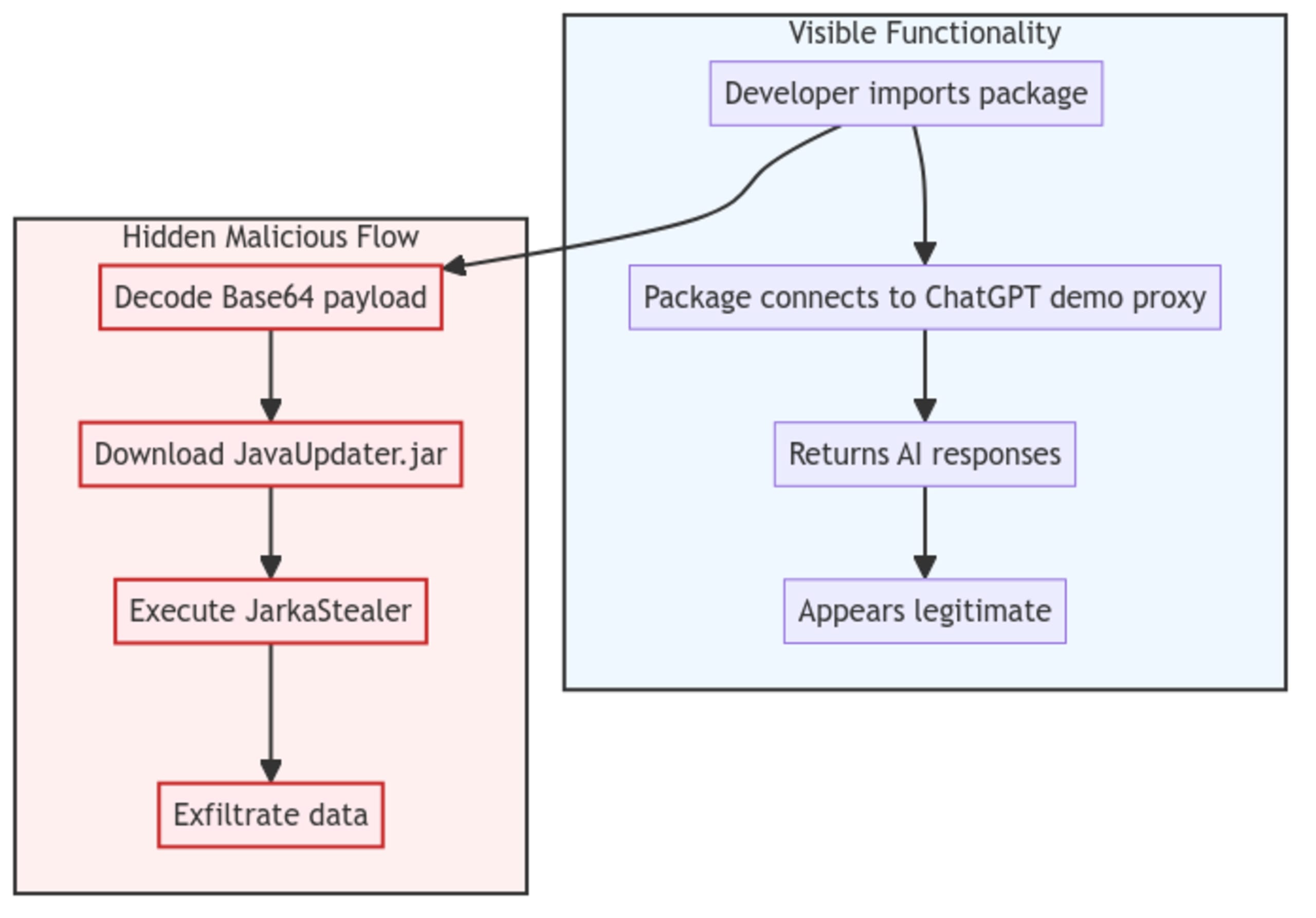

The Deception Mechanism

The packages implemented a clever deception mechanism: they routed requests to ChatGPT's web interface through a proxy, effectively exploiting free accounts to avoid the need for legitimate API keys and paid tokens. This created the illusion of a legitimate API wrapper while the packages were actually scraping responses from the web interface. It also masked the malicious behavior occurring in the background, because users saw the expected responses from the AI models while the malware executed its payload.

This attack methodology closely mirrors traditional phishing tactics, where attackers exploit trust in familiar brands and services. Just as phishing emails impersonate legitimate organizations, these packages exploited developers' trust in popular AI tools. The attackers understood that developers actively seeking to integrate AI capabilities would be less likely to scrutinize a package that appeared to deliver expected functionality. This demonstrates how social engineering techniques are evolving to target developer workflows, making supply chain attacks particularly dangerous in the rapidly growing AI development ecosystem.

Technical Analysis of the Attack

Initial Infection Vector

The malware's entry point resided in each package's __init__.py file, which contained Base64-encoded malicious code that executed when the package was imported. The infection process followed these steps:

Package import triggers Base64 decoding and execution

Downloads JavaUpdater.jar from github[.]com/imystorage/storage

If a Java runtime is not present, downloads a JRE from a Dropbox URL

Executes the downloaded JAR file
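Because the payload hid behind a Base64 string that ran on import, one cheap defensive check before importing an unfamiliar package is to look for exec() or eval() calls that wrap a Base64 decode. The sketch below is our own heuristic, not how PyPI or any particular scanner detected this campaign; the name find_encoded_exec and the set of decoder names are assumptions. It only parses source with Python's ast module and never executes it.

```python
from __future__ import annotations

import ast

# Heuristic assumption: these base64-module decoders, when passed (directly or
# nested) into exec()/eval(), are worth flagging for manual review.
DECODE_FUNCS = {"b64decode", "b32decode", "b16decode", "b85decode", "a85decode", "decodebytes"}
EXEC_FUNCS = {"exec", "eval"}


def _call_name(node: ast.Call) -> str | None:
    """Bare name of the called function: exec(...) -> 'exec', base64.b64decode(...) -> 'b64decode'."""
    if isinstance(node.func, ast.Name):
        return node.func.id
    if isinstance(node.func, ast.Attribute):
        return node.func.attr
    return None


def find_encoded_exec(source: str) -> list[int]:
    """Return line numbers of exec/eval calls whose arguments contain a Base64 decode.

    The source is only parsed, never executed.
    """
    hits = []
    for node in ast.walk(ast.parse(source)):
        if not (isinstance(node, ast.Call) and _call_name(node) in EXEC_FUNCS):
            continue
        # Search every sub-expression of each positional argument for a decode call.
        for arg in node.args:
            if any(
                isinstance(inner, ast.Call) and _call_name(inner) in DECODE_FUNCS
                for inner in ast.walk(arg)
            ):
                hits.append(node.lineno)
                break
    return sorted(hits)


# Usage: the encoded string is just print(1), and it is parsed, not run.
sample = "import base64\nexec(base64.b64decode('cHJpbnQoMSk=').decode())\n"
print(find_encoded_exec(sample))  # [2]
```

Point it at a downloaded package's __init__.py before installing or importing it. The check misses obfuscation that first stores the decoded string in a variable, so an empty result means "not obviously malicious", not "safe".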

The JarkaStealer Component

The downloaded JavaUpdater.jar was identified as a variant of JarkaStealer, a Malware-as-a-Service tool distributed through Telegram channels. The malware's capabilities include:

Browser data exfiltration

System screenshot capture

System information collection

Session data theft from multiple applications:

Telegram

Discord

Steam

Minecraft cheat clients

Data Exfiltration Process

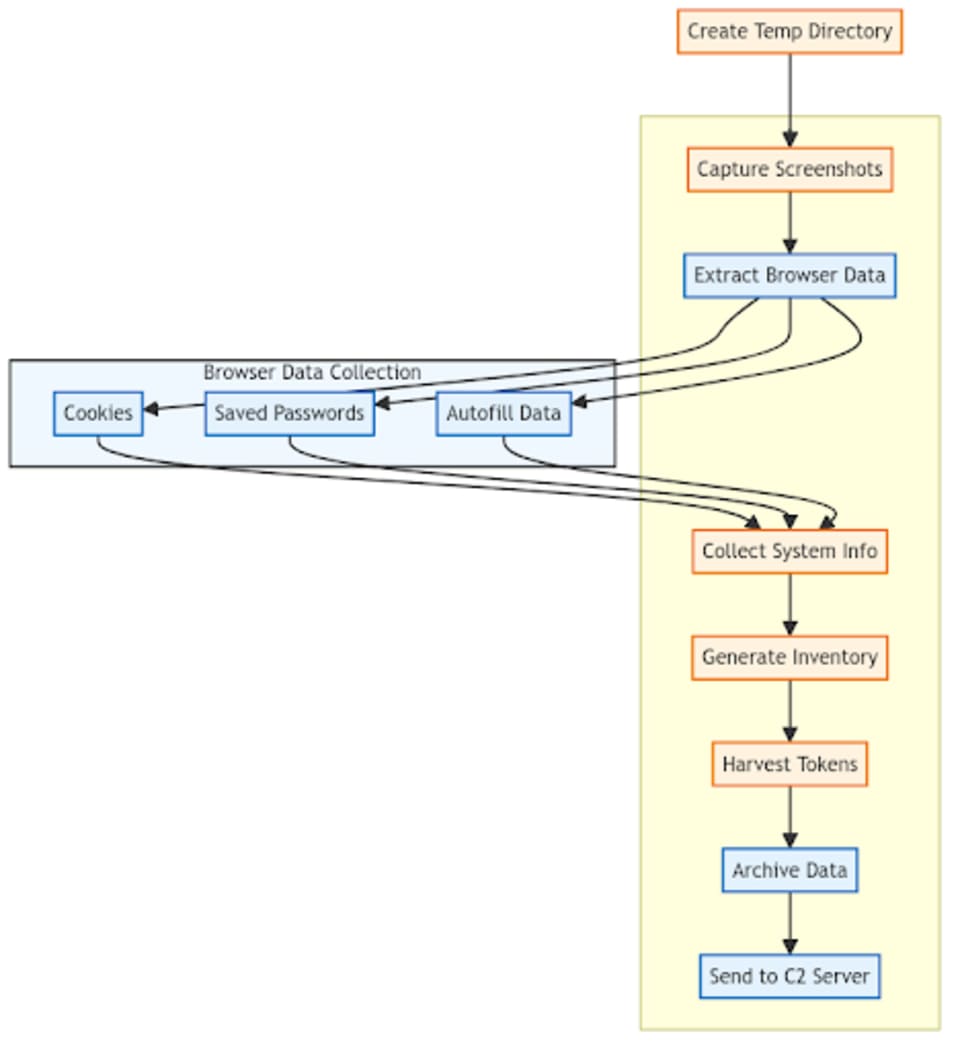

The malware's exfiltration process follows a multi-stage approach to maximize data collection. The routine begins by establishing a temporary directory to store the collected data. From there, it captures system screenshots to document the user's workspace and activity.

The malware then conducts a comprehensive sweep of browser data, extracting cookies, saved passwords, and autofill information, all of which are valuable for credential theft or further system compromise. After gathering user information and generating a detailed software inventory, it harvests authentication tokens from various applications. Finally, it bundles all collected data into an archive and transmits it to a cloud-based command and control server for retrieval by the attackers.

Indicators of Compromise (IOCs)

Package Hashes

gptplus-2.2.0.tar.gz

0453a2d0b09229745f328a98a7f13f28817307f070dc22111eaccddb5430ea9e

claudeai_eng-1.0.0.tar.gz

58f68218f7d0c2150195608fac96e5a4249c58c749eeb8fcc9de8396d3c0acb8

Malicious URLs

github[.]com/imystorage/storage/raw/main/JavaUpdater.jar

dropbox[.]com/scl/fi/hkl9jp9kgkk2qvdg4cvk2/jre-1.8.zip
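To check downloaded archives or logs against these IOCs, a short script can hash a file with SHA-256 and search text for the two payload URLs. This is a minimal sketch under our own assumptions: the names IOC_HASHES, match_ioc_hash, and lines_with_ioc_urls are hypothetical, the URLs stay defanged in the source and are refanged only at runtime, and plain substring matching is assumed to be good enough for proxy or shell-history logs.

```python
from __future__ import annotations

import hashlib
from pathlib import Path

# SHA-256 digests of the malicious sdists, copied from the IOC list.
IOC_HASHES = {
    "0453a2d0b09229745f328a98a7f13f28817307f070dc22111eaccddb5430ea9e": "gptplus-2.2.0.tar.gz",
    "58f68218f7d0c2150195608fac96e5a4249c58c749eeb8fcc9de8396d3c0acb8": "claudeai_eng-1.0.0.tar.gz",
}

# URLs stay defanged here, as in the advisory, and are refanged only for matching.
IOC_URLS_DEFANGED = (
    "github[.]com/imystorage/storage/raw/main/JavaUpdater.jar",
    "dropbox[.]com/scl/fi/hkl9jp9kgkk2qvdg4cvk2/jre-1.8.zip",
)
IOC_URLS = tuple(url.replace("[.]", ".") for url in IOC_URLS_DEFANGED)


def sha256_of(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hash the file in chunks so large archives are not read into memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def match_ioc_hash(path: Path) -> str | None:
    """Return the IOC file name if the file's digest is a known-bad hash, else None."""
    return IOC_HASHES.get(sha256_of(path))


def lines_with_ioc_urls(text: str) -> list[str]:
    """Return the lines of a log that mention either payload URL (substring match)."""
    return [line for line in text.splitlines() if any(url in line for url in IOC_URLS)]
```

A hash match identifies only these exact archives; a repackaged copy with different contents will not match, so treat this as one signal among several.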

Mitigation and Prevention

Immediate Actions

If you suspect you've installed these packages:

Remove the malicious packages immediately:

pip uninstall gptplus claudeai-eng

Audit system for compromised data

Reset authentication tokens for affected services

Scan for additional malware presence
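Checking whether either package is present can be scripted. The sketch below is our own assumption rather than an official tool: it lists the distributions visible to the current interpreter with the standard-library importlib.metadata module and flags the two names from this advisory. MALICIOUS, normalize, and find_installed are hypothetical names. It only sees the interpreter it runs under, so run it inside each virtual environment and with each Python installation you use.

```python
from __future__ import annotations

import re
from importlib import metadata
from typing import Iterable

# Package names from this advisory.
MALICIOUS = frozenset({"gptplus", "claudeai-eng"})


def normalize(name: str) -> str:
    """PEP 503 normalization: lowercase, runs of '-', '_', '.' become '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def installed_distributions() -> list[tuple[str, str]]:
    """(name, version) for every distribution visible to this interpreter."""
    return [
        (dist.metadata.get("Name") or "", dist.version or "")
        for dist in metadata.distributions()
    ]


def find_installed(
    targets: Iterable[str],
    installed: Iterable[tuple[str, str]] | None = None,
) -> list[tuple[str, str]]:
    """Return sorted (normalized_name, version) pairs whose name matches a target."""
    if installed is None:
        installed = installed_distributions()
    wanted = {normalize(t) for t in targets}
    return sorted({(normalize(n), v) for n, v in installed if normalize(n) in wanted})


if __name__ == "__main__":
    for name, version in find_installed(MALICIOUS):
        print(f"FOUND {name}=={version}: uninstall it and rotate credentials")
```

Names are compared after PEP 503 normalization, so claudeai_eng and ClaudeAI-Eng both match. A clean result does not prove the JAR never ran: if either package was ever imported, the remaining steps (auditing, token resets, malware scan) still apply.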

Preventive Measures

To protect against similar supply chain attacks, it is advisable to follow best practices (as covered in our earlier cheat sheet).

Scan Your Dependencies:

Run snyk test via the Snyk CLI to immediately check whether your projects use these malicious packages

Connect your GitHub repositories to Snyk for automated monitoring and early detection of vulnerable dependencies

Enable Snyk's IDE plugins for real-time, shift-left security feedback during development
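Alongside snyk test, a rough offline check of plain requirements files can look for the two package names line by line. This is our own heuristic, not part of the Snyk CLI; flag_requirements is a hypothetical helper that handles simple name==version style lines, skips comments and pip options such as -r, and does not understand other lockfile formats.

```python
from __future__ import annotations

import re
from typing import Iterable

# Package names from this advisory.
MALICIOUS = frozenset({"gptplus", "claudeai-eng"})

# Leading project name of a requirement line, e.g. "claudeai_eng==1.0.0" -> "claudeai_eng".
_NAME = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalize(name: str) -> str:
    """PEP 503 normalization: lowercase, runs of '-', '_', '.' become '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def flag_requirements(text: str, blocked: Iterable[str] = MALICIOUS) -> list[tuple[int, str]]:
    """Return (line_number, normalized_name) for each blocked package in requirements text."""
    wanted = {normalize(b) for b in blocked}
    hits = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop inline and full-line comments
        if not line or line.startswith("-"):  # skip blanks and options such as -r or -e
            continue
        match = _NAME.match(line)
        if match and normalize(match.group(1)) in wanted:
            hits.append((lineno, normalize(match.group(1))))
    return hits


if __name__ == "__main__":
    import sys

    # Usage: python check_reqs.py requirements.txt dev-requirements.txt
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as fh:
            for lineno, name in flag_requirements(fh.read()):
                print(f"{path}:{lineno}: {name}")
```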

Implement Package Security Best Practices:

Use virtual environments for all Python projects to isolate dependencies

Never use pre-installed Python versions; install and maintain your own

Check Snyk Advisor for information on unfamiliar packages

Set DEBUG = False in production environments

Dependency verification:

Use package hash verification

Maintain a private PyPI mirror

Implement dependency lockfiles
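Hash verification and lockfiles work together: in pip's hash-checking mode (pip install --require-hashes -r requirements.txt), each pin carries one or more digests in the form name==version --hash=sha256:<digest>, and pip rejects any downloaded archive whose digest is not listed. The sketch below mimics that comparison in plain Python to show the idea; pinned_hashes and verify_artifact are hypothetical helpers, not pip internals, and they only understand simple pins with --hash options, including backslash-continued lines.

```python
from __future__ import annotations

import hashlib
import re
from pathlib import Path

_HASH = re.compile(r"--hash=sha256:([0-9a-f]{64})")
_NAME = re.compile(r"^([A-Za-z0-9][A-Za-z0-9._-]*)")


def normalize(name: str) -> str:
    """PEP 503 normalization: lowercase, runs of '-', '_', '.' become '-'."""
    return re.sub(r"[-_.]+", "-", name).lower()


def pinned_hashes(lock_text: str) -> dict[str, set[str]]:
    """Map normalized package name -> allowed SHA-256 digests from a hash-pinned requirements file."""
    joined = re.sub(r"\\[ \t]*\n", " ", lock_text)  # join backslash-continued lines
    pins: dict[str, set[str]] = {}
    for raw in joined.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):  # skip blanks and standalone options
            continue
        match = _NAME.match(line)
        if match:
            pins.setdefault(normalize(match.group(1)), set()).update(_HASH.findall(line))
    return pins


def verify_artifact(name: str, path: Path, pins: dict[str, set[str]]) -> bool:
    """True only if the archive's digest is one of the digests pinned for that package."""
    digest = hashlib.sha256(path.read_bytes()).hexdigest()
    return digest in pins.get(normalize(name), set())
```

In practice, tools such as pip-tools (pip-compile --generate-hashes) generate these hash-pinned files, and pip performs the comparison itself at install time; an unpinned package, or a swapped archive, then fails the install instead of running its import-time code.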

Key Takeaways

This incident highlights several critical aspects of modern supply chain security:

Malware authors are increasingly targeting AI development tools and libraries, capitalizing on their growing popularity.

Using legitimate functionality as a cover for malicious operations makes detection more challenging.

The extended period of undetected operation (over a year) demonstrates the need for improved security scanning in public package repositories.

The sophisticated exfiltration capabilities show the evolution of supply chain attacks from simple cryptominers to complex data theft operations.

Future Implications

This attack reflects a concerning trend in supply chain security, particularly in the AI development ecosystem. As AI tools become more prevalent, we can expect attackers to target AI development tools and libraries more often, with more sophisticated deception mechanisms, enhanced data exfiltration capabilities, and a greater focus on stealing AI-related assets and credentials. Organizations and developers must remain vigilant and implement comprehensive security measures to protect against these evolving threats.
